Facial Expression Recognition With Machine Learning and Assessment of Distress in Patients With Cancer

Objectives: To estimate the effectiveness of combining facial expression recognition and machine learning for better detection of distress.

Sample & Setting: 232 patients with cancer in Sichuan University West China Hospital in Chengdu, China.

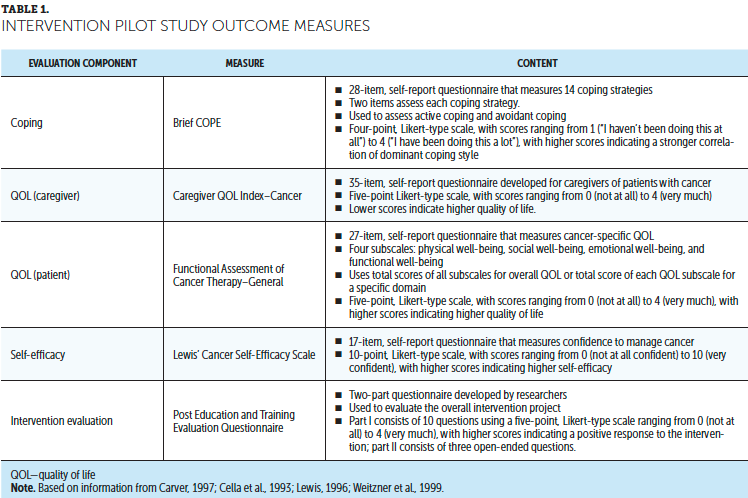

Methods & Variables: The Distress Thermometer (DT) and Hospital Anxiety and Depression Scale (HADS) were used as instruments. The HADS included scores for anxiety (HADS-A), depression (HADS-D), and total score (HADS-T). Distressed patients were defined by the DT cutoff score of 4, the HADS-A cutoff score of 8 or 9, the HADS-D cutoff score of 8 or 9, or the HADS-T cutoff score of 14 or 15. The authors applied histogram of oriented gradients to extract facial expression features from face images, and used a support vector machine as the classifier.

Results: The facial expression features showed feasible differentiation ability on cases classified by DT and HADS.

Implications for Nursing: Facial expression recognition could serve as a supplementary screening tool for improving the accuracy of distress assessment and guide strategies for treatment and nursing.

Distress is a continuous process of unpleasant experience, which could be a normal emotional response of sadness, fear, and fatigue, but it may also deteriorate into depression, anxiety, panic, and other mental crises without early diagnosis and intervention (Riba et al., 2019). Many factors, such as psychological, social, and spiritual environment, are involved in the pathogenesis of distress (Alfonsson et al., 2016). Distress is common in patients with cancer, and it was estimated in Zabora et al. (2001) that 35% of 4,496 patients with cancer had significant distress, particularly those with lung (43%) and brain (43%) cancer. In addition, distress has serious negative effects on treatment adherence, symptom severity, therapeutic effect, quality of life, and prognosis in patients with cancer (Batty et al., 2017; Grassi et al., 2015; Mitchell et al., 2011). Therefore, prompt screening and diagnosis of distress is of essential interest for enhancing multiple aspects of quality of life for patients with cancer (Carlson et al., 2010).

Assessment of distress consists of contact and non-contact approaches (Gavrilescu & Vizireanu, 2019). Contact-based methods use sensors attached to the body to measure parameters such as electroencephalogram (Li et al., 2019) and electrodermal activity (Sarchiapone et al., 2018); these are more precise but require more cost and effort. Because oncologists are often less sensitive and efficient in detecting patient distress during busy clinical work (Hedström et al., 2006; Söllner et al., 2001), rapid and effective screening tools are needed to improve the efficiency of diagnosing distress. Non-contact measurements include questionnaires, facial analysis, and speech analysis. For example, the National Comprehensive Cancer Network (NCCN, 2020) Distress Thermometer (DT), a single-item self-report questionnaire, is simple to administer and uses the more acceptable nonpathologic term “distress” (Riba et al., 2019). However, distress is a subjective experience, and patients may not recognize it, or they may hide their true psychological state during self-assessment, which increases the difficulty of diagnosis (Sood et al., 2013). Given these limitations of questionnaire methods, novel approaches are needed to assist the routine diagnosis and monitoring of distress.

Facial expression recognition has the advantages of being nonintrusive, cost-effective, and convenient. Computer vision is the study of automatic extraction, processing, and understanding of information from digital images or videos (Klette, 2014). Machine learning is the use of computer algorithms that learn from training data to make judgments without explicit programming (Rajkomar et al., 2019). With the development of machine learning and computer vision techniques, the accuracy of facial expression recognition has continued to improve, and the technology is becoming increasingly intelligent (Prkachin, 2009). The extraction and classification of facial features are the most important steps in expression recognition. Common feature extraction algorithms include the histogram of oriented gradients (HOG) (Calvillo et al., 2016), local binary patterns (Maturana et al., 2011), and the active appearance model (AAM) (Cohn et al., 2009). HOG is a feature descriptor that captures the local gradient features of images and has been applied in pedestrian detection (Watanabe et al., 2010) and face recognition (Déniz et al., 2011; Salhi et al., 2012). HOG performs comparably to other descriptors at lower computational complexity, which facilitates its application in real-time image processing (Salhi et al., 2012).

For feature classification, the authors of this study used the support vector machine (SVM) algorithm, which is suited to binary classification problems. SVM learns from the training set, transforms the original feature space into a high-dimensional space, and finds a hyperplane that separates the data into two categories (Heisele et al., 2001). The combination of HOG features and an SVM classifier achieved good recognition rates for pedestrians and faces in previous studies (Huang et al., 2012; Son et al., 2010). Some studies have differentiated participants with stress, anxiety, or depression from mentally well participants using different methods of feature extraction and classification (Cohn et al., 2009; Pediaditis et al., 2015; Prasetio et al., 2018). For example, Cohn et al. (2009) achieved an accuracy of 79% for depression recognition using AAM and SVM. Prasetio et al. (2018) extracted facial features via HOG, difference of Gaussians, and discrete wavelet transform, and then reached an accuracy of 82% for classifying stress with a convolutional neural network. These results indicate the potential of facial expression recognition to effectively detect these unpleasant emotional states.

To the best of the authors’ knowledge, the use of face recognition as a means of screening for distress in patients with cancer has not been analyzed. Distress can be regarded as a more complex facial expression involving depression, anxiety, and stress, so recognizing distress from facial features is a plausible approach. Therefore, the purpose of this study was to analyze the distress prevalence and related factors in patients with cancer, to extract HOG features of facial expression, and to use SVM as the classifier to better identify patients with significant distress.

Methods

Theoretical Framework

The theoretical framework for explaining the interaction of nursing discipline with computer vision and machine learning is shown in Figure 1. As branches of artificial intelligence, computer vision and machine learning are applied as virtual assistants to handle complex medical problems related to medical images diagnoses, progression prediction, and disease management (Rajkomar et al., 2019). These new technologies can help nurses expand their assessment of patients’ mental health through metrics, such as facial expression, and provide guidance for personalized nursing interventions (Clancy, 2020).

Nursing is a distinct healthcare discipline that promotes health and alleviates pain based on diagnosis and treatment and human response (American Nurses Association, 2010). Nurses are in a key position to identify and manage psychological problems in patients with cancer (Legg, 2011). As a bridge between technology and patient response, nursing can advance the development of computer vision and machine learning to better meet the needs of patients. The transformation of computer vision and machine learning to clinical nursing use requires long-term practice. The authors of this article present a study design framework to identify distress in patients with cancer, which may contribute to the integration of computer vision and machine learning in nursing practice.

Participants

The authors established a database by recruiting hospitalized patients in the cancer center of Sichuan University West China Hospital in Chengdu, China, from July to October 2019. Inclusion criteria were: a diagnosis of cancer by pathological examination, age of 18 years or older, and the ability to understand and answer questionnaires.

Based on the medical history, neurophysical examination, and computed tomography/magnetic resonance imaging scanning, patients with mental confusion or organic psychotic illness were excluded. The institution ethics commission of West China Hospital of Sichuan University approved the research, and all patients provided informed consent.

Procedure

Eligible patients were identified by their attending physicians or nurses, who asked for the initial consent of patients. Two oncology nurses (N. Zhu and H. Xue) with 10 years of nursing experience and 6 years of psychological care experience approached the patients and explained the purpose and procedures of the study. The privacy of patients was protected. Patients who agreed to participate signed an informed consent document and completed the DT (NCCN, 2020; Roth et al., 2000) and Hospital Anxiety and Depression Scale (HADS) (Zigmond & Snaith, 1983) self-report questionnaires. The cancer-related information was confirmed by medical records. In addition, the authors video recorded patients’ faces during completion of the questionnaires. Facial images of 16 patients did not meet quality requirements, likely because of face posture or lighting conditions. Data for 232 patients were included in the analyses (see Figure 2). The sample size calculation by PASS, version 11, showed that at least 210 patients (42 with distress [prevalence = 0.2]) needed to be included in the study when the sensitivity and specificity were 0.75.

Psychological Measurement

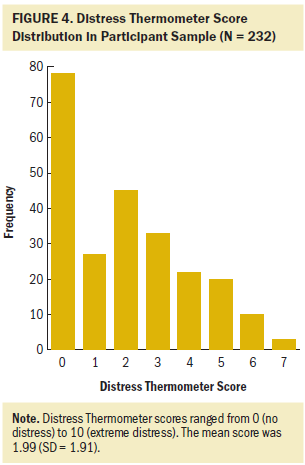

The DT is a visual analog measure of psychological distress rated from 0 (no distress) to 10 (extreme distress) (NCCN, 2020). Patients were asked to choose the most appropriate degree to describe their distress in the past seven days. A meta-analysis of 42 studies about the DT (cutoff score of 4) reported good sensitivity of 0.81 (95% CI [0.79, 0.82]) and specificity of 0.72 (95% CI [0.71, 0.72]) (Ma et al., 2014). The Chinese version of the DT (Zhang et al., 2010) (cutoff score of 4) also yielded optimal sensitivity (0.8–0.87), specificity (0.7–0.72), and test-retest reliability (r = 0.8, p < 0.01) in 574 patients with cancer (Tang et al., 2011). A guideline from the NCCN suggested that a DT score of 4 or greater indicates obvious distress (Riba et al., 2019).

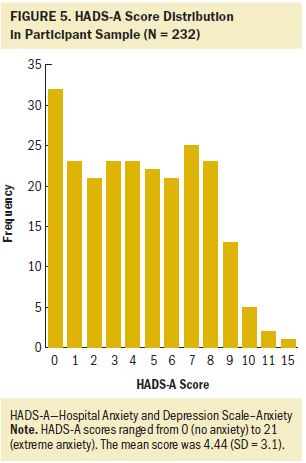

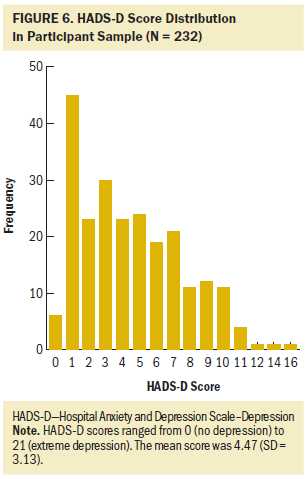

The HADS comprises two subscales, 7 items for anxiety (HADS-A) and 7 items for depression (HADS-D), which sum to the total score (HADS-T) (Bjelland et al., 2002). Each item has four options (scored on a scale from 0 to 3), and patients select the one that best matches their feelings in the past week. There is no consensus on the HADS cutoff threshold; however, according to meta-analyses, frequently used cutoff values are 14 or 15 for HADS-T, and 8 or 9 for HADS-A and HADS-D in patients with cancer (Ma et al., 2014; Mitchell et al., 2010). The Chinese version of HADS displayed good construct validity (comparative fit index > 0.95) and internal consistency reliability (Cronbach alpha > 0.85) for 641 patients with cancer (Li et al., 2016).
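
The scoring and labeling rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' code: the function names `hads_scores` and `label_distress` and the example responses are hypothetical, and the item scores are assumed to be already grouped by subscale.

```python
from typing import Dict, Sequence


def hads_scores(anxiety_items: Sequence[int],
                depression_items: Sequence[int]) -> Dict[str, int]:
    """Sum 7 anxiety and 7 depression item scores (each scored 0 to 3)."""
    for items in (anxiety_items, depression_items):
        if len(items) != 7 or any(not 0 <= s <= 3 for s in items):
            raise ValueError("each subscale needs 7 item scores in the range 0-3")
    anxiety, depression = sum(anxiety_items), sum(depression_items)
    return {"HADS-A": anxiety, "HADS-D": depression, "HADS-T": anxiety + depression}


def label_distress(scores: Dict[str, int], cutoffs: Dict[str, int]) -> Dict[str, bool]:
    """A case is positive on a scale when its score is at or above the cutoff."""
    return {name: scores[name] >= cutoffs[name] for name in cutoffs}


# The two sets of cutoff values named in the text.
LOWER_CUTOFFS = {"HADS-A": 8, "HADS-D": 8, "HADS-T": 14}
HIGHER_CUTOFFS = {"HADS-A": 9, "HADS-D": 9, "HADS-T": 15}

# Hypothetical responses for one patient (not study data).
example = hads_scores([1, 2, 1, 1, 2, 1, 0], [1, 1, 0, 1, 1, 1, 1])
lower_labels = label_distress(example, LOWER_CUTOFFS)
higher_labels = label_distress(example, HIGHER_CUTOFFS)
```

Because the same patient can be positive at one cutoff and negative at the other, each cutoff defines a separate set of labels for the classifier.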

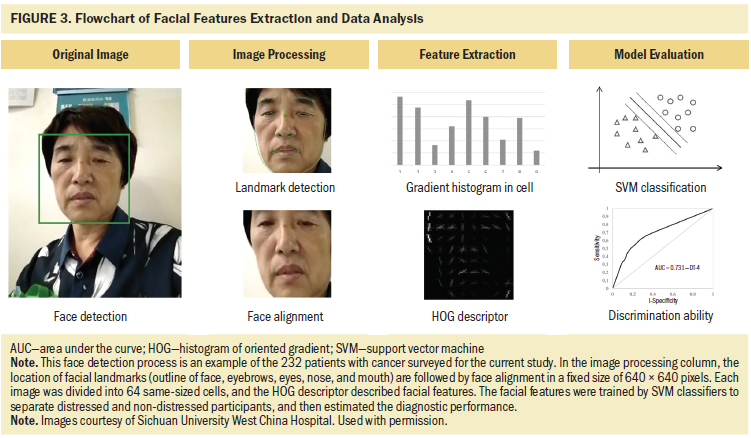

Face Detection and Alignment

The procedures of facial expression recognition and distress classification are presented in Figure 3. The authors used the Viola–Jones algorithm to detect the human face in images captured from videos (Viola & Jones, 2001), then applied rotation and intensity deviation to each face. The ears were excluded from the images of faces. Next, the cascaded pose regression framework (Dollar et al., 2010) was used to locate the facial landmarks, which included the outline of the face, eyebrows, eyes, nose, and mouth. After detecting the facial landmarks, the authors aligned each face so that faces photographed from different angles could be compared and errors caused by different postures could be eliminated (Brunelli, 2009). Because the eyes were the most stable of all facial elements, the authors found the straight line between the two eyes, calculated the inclination angle between that line and the horizontal line, rotated the image according to the inclination angle, and adjusted the image size based on the coordinates of the eyes. The nose and mouth were automatically aligned vertically with the eyes because they were not related to the head position. A fixed size of 640 × 640 pixels for each image was used in the analysis.
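
The eye-based alignment step can be illustrated with a short geometric sketch. It assumes the two eye centers are already available as (x, y) pixel coordinates from the landmark detector; the function names, the example coordinates, and the target inter-eye distance of 256 pixels are hypothetical and are not taken from the study.

```python
import math


def eye_alignment(left_eye, right_eye):
    """Return (inclination angle in degrees, inter-eye distance in pixels)."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx)), math.hypot(dx, dy)


def rotate_point(point, center, angle_degrees):
    """Rotate `point` about `center` by -angle, so the eye line becomes horizontal."""
    theta = math.radians(-angle_degrees)
    x, y = point[0] - center[0], point[1] - center[1]
    return (center[0] + x * math.cos(theta) - y * math.sin(theta),
            center[1] + x * math.sin(theta) + y * math.cos(theta))


# Hypothetical eye centers in a tilted face image.
left, right = (200.0, 300.0), (400.0, 340.0)
angle, distance = eye_alignment(left, right)
center = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)

# After rotation both eyes share the same y coordinate.
new_left = rotate_point(left, center, angle)
new_right = rotate_point(right, center, angle)

# Resize factor that brings the eyes to an assumed target distance.
target_eye_distance = 256.0  # hypothetical value
scale = target_eye_distance / distance
```

In practice the same rotation and scale would be applied to every pixel of the image (for example with an affine warp from an image library) before cropping to the fixed output size.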

Facial Feature Descriptor

The HOG descriptor is a local statistic of edge orientations of image gradients (Calvillo et al., 2016). First, the contrast of images was adjusted by standardization and normalization to reduce the negative effect of local shadow and light changes on the images. Then, the images were divided into 64 small connected regions called cells. The gradients (magnitude and direction) of each pixel in the cell were calculated to capture the contour and further decrease the light interference. After this step, the authors of the current study computed the histogram of gradient directions for each cell as the feature vector (nine dimensions), and concatenated the feature vectors of every four adjacent cells into the feature vector of a block (4 × 9 = 36 dimensions). Each block was then reduced to a 31-dimensional vector by principal component analysis (Felzenszwalb et al., 2010). Finally, the set of block histograms represented the feature descriptor of each image.
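
To make the cell and block construction concrete, the following simplified sketch computes a nine-bin orientation histogram for one cell and concatenates four cells into a 36-dimensional block vector. It is a teaching sketch under stated assumptions, not the authors' implementation: it uses central differences, unsigned orientations (0 to 180 degrees), hard bin assignment without interpolation, and L2 block normalization, and it omits the reduction to 31 dimensions. The names `cell_histogram` and `block_descriptor` and the toy 6 × 6 cells are hypothetical.

```python
import math


def cell_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations for one cell."""
    height, width = len(cell), len(cell[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]   # horizontal central difference
            gy = cell[r + 1][c] - cell[r - 1][c]   # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


def block_descriptor(cells, eps=1e-6):
    """Concatenate the histograms of four adjacent cells and L2-normalize them."""
    vector = [value for cell in cells for value in cell_histogram(cell)]
    norm = math.sqrt(sum(value * value for value in vector) + eps ** 2)
    return [value / norm for value in vector]


# Toy cells: one with a vertical edge, one with a horizontal edge.
vertical_edge = [[0, 0, 0, 10, 10, 10] for _ in range(6)]
horizontal_edge = [[0] * 6 for _ in range(3)] + [[10] * 6 for _ in range(3)]

hist_v = cell_histogram(vertical_edge)
hist_h = cell_histogram(horizontal_edge)
block = block_descriptor([vertical_edge, vertical_edge,
                          horizontal_edge, horizontal_edge])
```

The vertical edge places all of its gradient energy in the first orientation bin and the horizontal edge in the middle bin, which is how the descriptor encodes contour direction while staying insensitive to uniform brightness shifts.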

Distress Level Estimation

The SVM is a classifier for two-category data that finds the optimal decision boundary, called the hyperplane, that minimizes bias and error (Heisele et al., 2001). Patients were randomly divided into a training group and a validation group at a ratio of 7:3. To avoid accidental results, the cross-validation was repeated 10 times. The facial features of the training set were labeled with the scores of the psychometric questionnaires. The cutoff values for positive cases were 4 for DT, 14 or 15 for HADS-T, and 8 or 9 for HADS-A and HADS-D. Finally, the authors calculated the average values of accuracy, sensitivity/true positive rate, specificity/true negative rate, and area under the curve (AUC) of the receiver operating characteristic (ROC) curve to estimate the overall effectiveness in validation sets. All statistical analyses were conducted using Python®, version 3.7.
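
The evaluation measures named above can be written out explicitly. The sketch below shows a 7:3 random split and the four reported metrics; it does not train an SVM, and the labels and scores are made-up stand-ins for classifier output. All function names are hypothetical, and the rounding rule in `split_7_3` is an assumption because the exact group sizes are not stated in the text.

```python
import random


def split_7_3(n_patients, rng):
    """Shuffle patient indices and split them 7:3 into training and validation."""
    indices = list(range(n_patients))
    rng.shuffle(indices)
    cut = round(0.7 * n_patients)
    return indices[:cut], indices[cut:]


def confusion_metrics(y_true, y_pred):
    """Accuracy, sensitivity (true positive rate), specificity (true negative rate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


def auc(y_true, scores):
    """AUC as the chance that a positive case outscores a negative one (ties = 0.5)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


train_idx, valid_idx = split_7_3(232, random.Random(0))

# Made-up labels, hard predictions, and decision scores for five cases.
y_true = [1, 1, 0, 0, 0]
y_pred = [1, 0, 0, 0, 1]
y_score = [0.9, 0.4, 0.3, 0.2, 0.8]
metrics = confusion_metrics(y_true, y_pred)
area = auc(y_true, y_score)
```

Repeating the split with different random seeds and averaging each metric over the repetitions gives the kind of averaged validation estimate described in the text.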

Results

Sample Characteristics

As shown in Table 1, 232 patients (213 female and 19 male) were included in this study, with a median age at first diagnosis of 48 years (range = 20–72 years). Most of the patients were married (96%) and had received middle school or lower education (57%). The types of cancer were breast cancer (86%), nasopharyngeal carcinoma (7%), lymphoma (3%), and others (glioblastoma, neuroblastoma, and melanoma, 5%). Fifty-three percent of patients had advanced cancer (stage III–IV), and 70% of patients had normal activities of daily living based on Eastern Cooperative Oncology Group performance status (PS) score. At the time of questionnaire evaluations, 212 patients were undergoing chemotherapy and 7 patients had concomitant radiation therapy/chemotherapy in the past month. The mean time since diagnosis was 19.3 months (range = 0.5–192 months).

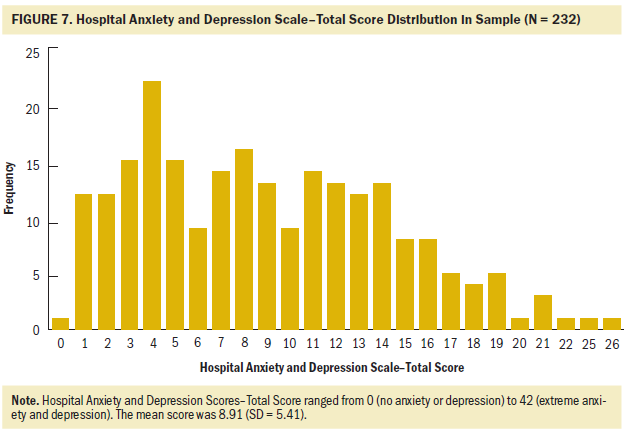

Distribution of Distress Thermometer and Hospital Anxiety and Depression Scale Scores

Among the 232 patients, 51 (22%) scored 4 or greater on the DT. For HADS-A, 44 (19%) and 22 (10%) patients scored at or above the thresholds of 8 and 9, respectively. In addition, the positive rate was 17% (8 or greater) and 13% (9 or greater) for HADS-D, and 22% (14 or greater) and 16% (15 or greater) for HADS-T. The scores of the DT, HADS-D, and HADS-T were markedly skewed, with most scores concentrated at the low end (see Figures 4–7). As many as half of the scores ranged from 0 to 2 on the DT, and the most common DT score was 0. In addition, the authors used chi-square and t-test analysis to compare the demographic and clinical characteristics between groups divided by the cutoff value of 4 on the DT. These variables were not significantly associated with the detection of distress in patients.

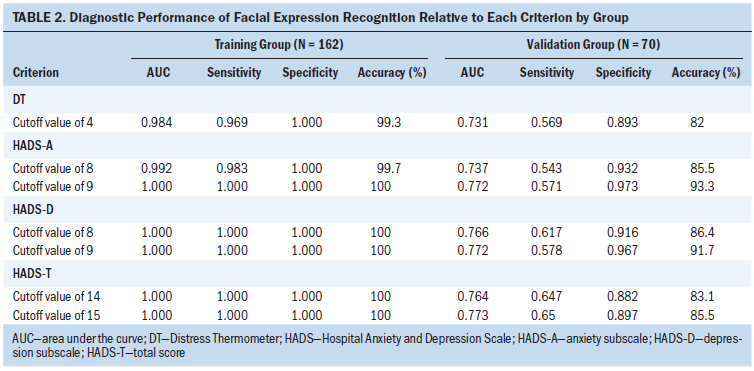

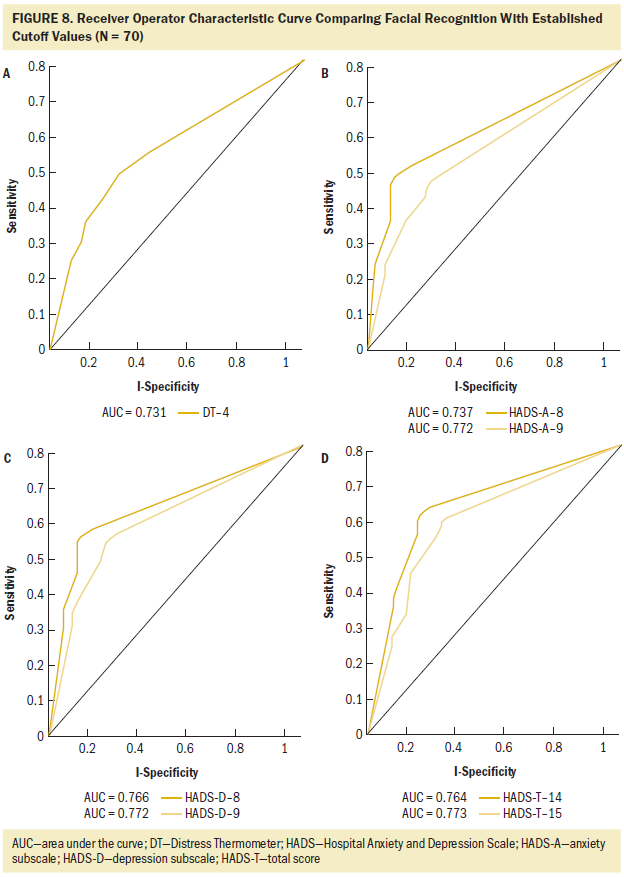

Diagnostic Performance of Facial Expression Recognition

The results of distress recognition by a machine learning model based on facial expression features are listed in Table 2. The positive cases were defined by the DT (cutoff score of 4), HADS-A (cutoff score of 8 or greater or 9 or greater), HADS-D (cutoff score of 8 or greater or 9 or greater), or HADS-T (cutoff score of 14 or greater or 15 or greater). When using the DT as the criterion, the classification accuracy reached 82% with an AUC of 0.731 (sensitivity = 0.569, specificity = 0.893) in the validation group (see Figure 8A). Facial recognition yielded an AUC of 0.737 (sensitivity = 0.543, specificity = 0.932, accuracy = 85.5%) for the HADS-A cutoff value of 8. When patients were classified by HADS-A at a threshold of 9, the analyses obtained a higher AUC of 0.772 (sensitivity = 0.571, specificity = 0.973, accuracy = 93.3%) (see Figure 8B). The proposed model also yielded an AUC of 0.766 (accuracy = 86.4%) for HADS-D (8 or greater) and an AUC of 0.772 (accuracy = 91.7%) for HADS-D (9 or greater) (see Figure 8C). Good performance was likewise noted for HADS-T, with a cutoff value of 14 (AUC = 0.764, sensitivity = 0.647, specificity = 0.882, accuracy = 83.1%) and a cutoff value of 15 (AUC = 0.773, sensitivity = 0.65, specificity = 0.897, accuracy = 85.5%) (see Figure 8D).
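
Accuracy, sensitivity, and specificity are linked through the proportion of positive cases, which allows a rough plausibility check of the figures above. The sketch below applies that identity to the DT and HADS-A (9 or greater) results. It assumes that the validation groups had approximately the same proportion of positive cases as the full sample (51 of 232 and 22 of 232), which the text does not state; the function name is hypothetical.

```python
def expected_accuracy(prevalence, sensitivity, specificity):
    """Accuracy implied by sensitivity and specificity at a given prevalence."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


# DT criterion: 51 of 232 patients scored 4 or greater.
dt_accuracy = expected_accuracy(51 / 232, 0.569, 0.893)

# HADS-A criterion at 9 or greater: 22 of 232 patients were positive.
hads_a9_accuracy = expected_accuracy(22 / 232, 0.571, 0.973)
```

Under this assumption the implied accuracies are close to the reported 82% and 93.3%. The check also shows why accuracy can be high when sensitivity is modest: with few positive cases, accuracy is dominated by specificity.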

Discussion

Distress is considered the sixth vital sign of cancer care (Bultz & Carlson, 2005) and is associated with negative outcomes, such as decreased treatment adherence, prolonged hospital stays, and poor quality of life (Grassi et al., 2015; Mitchell et al., 2011). The NCCN guideline (Riba et al., 2019) recommends screening all patients with cancer for distress, and monitoring and managing distress as part of timely routine care. In the current study, the authors proposed a machine learning model based on facial expression to automatically identify distress in patients with cancer. The facial HOG features with an SVM classifier performed consistently when tested against the criteria of DT and HADS. The highest accuracy was 82% for DT-defined cases, 85.5% to 93.3% for HADS-A cases, 86.4% to 91.7% for HADS-D, and 83.1% to 85.5% for HADS-T. These results indicate the significant diagnostic value of facial expression recognition in classifying distress for patients with cancer.

Significant distress has a high prevalence (20%–52%) in patients with cancer (Riba et al., 2019). In the current article, by using the DT as the criterion, the authors found that 22% of 232 patients had a significant level of distress (DT score of 4 or greater). A previous study reported a total prevalence of significant distress of 24.2% in 4,815 patients with cancer in China (Zhang et al., 2010). The resistance to psychological problems, called mental illness stigma, is still common in Chinese patients. Although the term “distress” is more acceptable and less stigmatizing, many patients still choose a low score or even a score of zero to hide their true feelings during doctor–patient communication. That might explain the lower detection rate and skewed distribution of DT and HADS scores as compared with studies in other countries. In terms of relevant risk factors of distress, there was no significant correlation between clinical characteristics and obvious distress (DT score of 4 or greater) in this study. Previous studies indicated association of higher DT score with separated, divorced, or widowed status, female gender, younger age, and poorer performance status (Enns et al., 2013; Garvey et al., 2018; Kim et al., 2011), but there is still no consensus. Therefore, the influence of these factors on psychological distress requires further investigation.

Previous studies have reported the application of facial expression recognition in the classification of depression, with an accuracy of 75% to 85% on the AVEC 2014 public dataset (Valstar et al., 2014; Yang et al., 2017; Zhou et al., 2018). In terms of self-built databases, Gavrilescu and Vizireanu (2019) proposed a three-layer architecture consisting of AAM, SVM, and a feed-forward neural network in 128 White patients. The classification accuracy of depression, anxiety, and stress was 87.2%, 77.9%, and 90.2%, respectively. In the current study, the authors focused on psychological distress in 232 Chinese patients with cancer and also obtained high accuracy (82% or greater). Compared with the DT (sensitivity: 0.79–0.82, specificity: 0.71–0.72) (Ma et al., 2014), facial recognition evaluated against the HADS criteria showed lower sensitivity (0.543–0.65) but higher specificity (0.882–0.973), which could reduce the number of false-positive cases. The questionnaire-based methods dominate the diagnosis of distress; however, the authors suggest facial recognition as a supplementary screening tool to make up for the shortcomings of questionnaires. On a computer using an i5-9400k processor, the average time for extracting the corresponding face image from the video was 0.18 seconds for each patient. The face detection, facial landmarks location, and face alignment required 1.23 seconds for each image. Finally, the average time of HOG feature extraction and 10 cycles of training and testing by SVM was 4.25 seconds. The total time needed for predicting distress by facial expression recognition was 5.66 seconds for each patient, faster than the time required to complete the DT and HADS (5 minutes). Therefore, facial expression recognition is expected to be combined with monitors or mobile devices to form a real-time processing system to monitor the distress level and equip healthcare professionals to make better decisions. The integration of manual evaluation and machine learning will be a future trend to improve clinical diagnoses, decision making, and patient care.

Limitations

Several limitations of this study should be discussed. First, the study was limited as a single-center investigation with potential selection bias. The sample was primarily Chinese women with advanced breast cancer, which indicates limited diversity of cancer type, stage, and ethnicity. Hewahi and Baraka (2011) found that White patients had a higher accuracy of emotion recognition, whereas Black patients had the worst accuracy, and the overall accuracy increased from 75% to 83.3% after considering ethnic group in a model. Reyes et al. (2018) suggested that cross-cultural differences rather than ethnicity led to decreased expression recognition accuracy. Therefore, the generalizability of the current results should be considered within these limitations. The effectiveness of facial expression recognition needs to be further evaluated and confirmed in multi-ethnic and multicultural populations. Some confounding factors, such as previous emotional state, temperament, and expression features of individuals, should also be considered in facial expression analysis. In addition, the sensitivity was relatively low in the validation groups, which was probably related to the insufficient number of distressed patients. Large and diverse data sets are essential for improving the accuracy of machine learning models. The authors will continue to expand the sample size for more robust results. Facial expression recognition in static images has the advantages of easy operation and convenient application but provides limited information in a single image. Therefore, dynamic videos with other feature descriptors and machine learning methods can be used to improve diagnosis modeling in future studies. This approach can also be extended to detect other psychological disorders in patients.

Implications for Nursing

Research suggests that distress is more common in hospitalized patients than outpatients (Stonelake-French et al., 2018). Oncology nurses play an important role in dealing with emotional discomfort in patients with cancer (Legg, 2011). They can observe the patient’s performance and behavior changes, as well as recognize and evaluate distress over time. Nurses provide guidance and support from diagnosis to recovery, which can decrease cancer-related distress and increase patient satisfaction (Swanson & Koch, 2010). Nurses can also communicate patients’ symptoms and needs to the healthcare team and conduct further examination and therapy to improve prognosis and quality of life. The self-assessment method by questionnaires is most widely used but may be subjective and one-sided during its implementation. Results of this preliminary study indicate the clinical feasibility of facial expression features and machine learning methods in evaluating distress level. Facial expression recognition requires easily available data (images or videos) and does not require a high-performance computer. After data processing and model training, the average time for distress identification is only a few seconds. Therefore, this approach is efficient and can be used for dynamic assessment of emotional state, which may help nursing staff conduct acute care and update intervention plans during hospitalization. Facial expression recognition can contribute to the identification and management of distress in patients with cancer without increasing the workload of the healthcare team.

Conclusion

The proposed machine learning model had good discrimination capability to identify distress in patients with cancer by analyzing facial expression. The automatic recognition of facial expression is suggested as an effective screening tool and an adjunct to traditional questionnaires, with broad prospects for application in clinical treatment and nursing. Timely diagnosis of distress may be helpful to guide interventions and improve the quality of life of patients with cancer.

The authors gratefully acknowledge Jie Shen, PhD, for providing technical support and statistical analysis.

About the Author(s)

Linyan Chen, MD, is a graduate student in the Department of Biotherapy in the Cancer Center at the State Key Laboratory of Biotherapy at West China Hospital at Sichuan University and at the Collaborative Innovation Center in Chengdu, both in China; Xiangtian Ma, ME, is a graduate student in the School of Information and Software Engineering at the University of Electronic Science and Technology of China in Chengdu; Ning Zhu, RN, and Heyu Xue, RN, are both clinical nurse specialists in the Department of Head and Neck Cancer in the West China School of Nursing at West China Hospital at Sichuan University; Hao Zeng, MD, is a graduate student in the Department of Biotherapy in the Cancer Center at the State Key Laboratory of Biotherapy at West China Hospital at Sichuan University and at the Collaborative Innovation Center in Chengdu, both in China; Huaying Chen, RN, is a clinical charge nurse in the Department of Head and Neck Cancer in the West China School of Nursing at West China Hospital at Sichuan University; Xupeng Wang, PhD, is a research associate in the School of Information and Software Engineering at the University of Electronic Science and Technology of China in Chengdu; and Xuelei Ma, MD, is an assistant professor in the Department of Biotherapy in the Cancer Center at the State Key Laboratory of Biotherapy at West China Hospital at Sichuan University and at the Collaborative Innovation Center in Chengdu, both in China. No financial relationships to disclose. Linyan Chen, Xiangtian Ma, Ning Zhu, and Xupeng Wang are co-first authors of the article. Xiangtian Ma and Xuelei Ma contributed to the conceptualization and design. Xiangtian Ma, Zhu, Xue, Zeng, and Chen completed the data collection. Xiangtian Ma, Wang, and Xuelei Ma provided statistical support. Chen, Xiangtian Ma, Zeng, and Wang provided the analysis. Chen, Zhu, Xue, Zeng, and Chen contributed to the manuscript preparation. Ma can be reached at drmaxuelei@gmail.com, with copy to ONFEditor@ons.org. 
(Submitted March 2020. Accepted August 8, 2020.)